As I’ve grown older, I’ve come to realize again and again that black-and-white thinking is one of the most unproductive and misleading ways of looking at the world.

“Political Party X is wrong. College is always a good investment. Notes must be taken on notebook paper. You’ve got to take a stance on this issue. Decide now!”

One of the many problems with this approach is the issue of precision. When asked for an opinion on a certain issue, how can you make that judgement without knowing the issue’s details?

Many issues are deceptively simple when presented in the form that humans understand intuitively; that is, when they’re presented in language.

Our ability to communicate using language is rooted deeply in the human brain’s practice of creating heuristics – assigning symbolic labels to complex concepts, thereby simplifying them.

We do this naturally, so it’s easy to slip into the habit of thinking that a concept’s linguistic representation is an accurate gauge of its simplicity. With just a bit of critical thinking, though, you realize that this definitely isn’t the case.

The human brain is – as far as we can observe – the most complex thing in the universe. Even so, the way it intuitively represents a concept isn’t a good gauge of that concept’s true complexity.

Take, for instance, this idea:

“A triangular room made of pink Jell-O expanding in cubic centimeters at the rate of one Fibonacci number per second in which Indiana Jones is doing battle with a talking hippo with lightsabers and jetpacks propelled by cold fusion”

Language, as we’ve established, is a fantastic tool for assigning simple labels to complex concepts so that they can be communicated between agents that have a similar understanding of those concepts. So I can actually represent this idea with a word, such as “florglarp.”

Then, I can ask a seemingly simple question:

“Do you believe in florglarp?”

Now, you know that I just made that word up. You have a better intuitive understanding of all the individual concepts I’m representing with “florglarp,” so it’s probably easy for you to see that this concept’s complexity can’t be accurately conveyed with that word.

But most of the words you use in everyday life are words you’ve known for a long time. They’re words that have also been made up, but they’ve been accepted into the lexicon of society. As a result, you have a better intuitive understanding of these heuristic words than you do of the actual, precise concepts they represent.

For instance, even though I used the phrase “triangular room,” your brain probably had an easy time picturing a room in the shape of a tetrahedron. However, without further consideration, you probably did not intuitively think of it as a necessarily imperfect tetrahedron, one whose edges never meet in mathematically perfect vertices because real walls are made of atoms held together by electromagnetic forces.

With practice, it becomes easier to identify complex, nuanced concepts that are represented by overly simplistic labels. You get to the point where I can ask you something like,

“Can a tetrahedron exist in our physical universe?”

…and you immediately realize that the question can’t be accurately answered until the concepts behind the question are more rigorously defined.

I like the way the artificial intelligence writer Eliezer Yudkowsky captures this idea in his article on Occam’s Razor:

“The more complex an explanation is, the more evidence you need just to find it in belief-space.”

Occam’s Razor, you might know, is a problem-solving principle that favors explanations that make the fewest assumptions. You might have heard it phrased as, “The simplest explanation that fits the facts is likely the correct one.”

Yudkowsky points out in his article that the science fiction writer Robert Heinlein replied that the simplest explanation is always,

“The lady down the street is a witch; she did it.”

Note: I couldn’t find any other references online to verify that Heinlein actually said this, but it’s a good illustration.

Heinlein’s supposed reply is succinct in terms of human language; however, as I’ve just demonstrated, human language isn’t actually a good measure of simplicity.

So what could possibly be used as an accurate measure for simplicity?

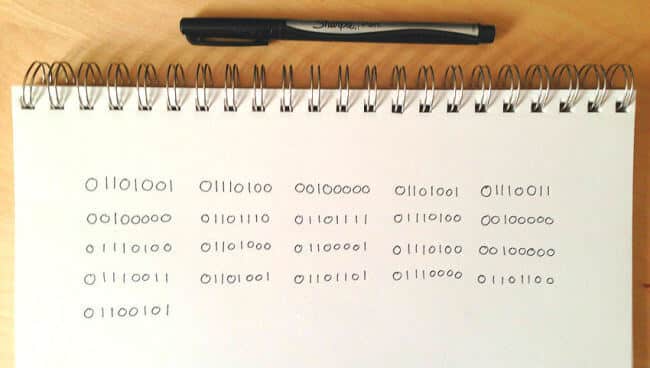

As it turns out, the answer is right in front of your face. Binary, the language that forms the foundation of every computer program, is as simple as you can get.

The binary alphabet has just two characters, 0 and 1, which represent the off and on states of an electrical switch, respectively.

At the lowest level, computers always think in black and white – because that’s the only possible way for them to think. Their alphabet only allows for one of two states to ever be expressed at a specific time.

These binary decisions happen at lightning-quick speed and in mind-boggling numbers. Programs written in higher-level languages are compiled down into them, lots of if-then statements are built on top of them, and eventually they come to represent more complex data that’s useful to you. At the core, though, it’s all just, “On or off?”
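To make the “on or off?” idea concrete, here is a minimal Python sketch showing how a short piece of text ends up stored as bits. It assumes UTF-8 encoding (which matches ASCII for plain English letters); the function names `to_bits` and `from_bits` are just illustrative.

```python
def to_bits(text: str) -> str:
    """Encode text as UTF-8 and show each byte as eight on/off switches."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


def from_bits(bits: str) -> str:
    """Reverse to_bits: read each 8-character group back as one byte."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")


if __name__ == "__main__":
    print(to_bits("Hi"))                     # 01001000 01101001
    print(from_bits(to_bits("florglarp")))   # florglarp
```

Even a made-up word like “florglarp” is, to the machine, nothing more than a row of switches.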

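Here’s how it’s useful: If you can make an idea computable, then you can, at least in principle, measure its complexity as the length of the shortest computer program that expresses it (a measure known as Kolmogorov complexity). Solomonoff Induction builds on exactly this idea: it favors hypotheses that can be expressed by shorter programs. There’s a catch, though. That shortest length can’t be computed in general, so Solomonoff Induction is an ideal to approximate rather than a procedure you can actually run.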
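Because the shortest-program length can’t be computed exactly, a common practical stand-in is the size of the data after compression, which gives an upper bound on it. The sketch below is an illustration under that assumption, not Solomonoff Induction itself: it uses Python’s standard `zlib` module as the compressor, and the hypothetical `simplicity_prior` helper shows the 2^-length weighting Solomonoff’s approach gives to shorter programs, applied to made-up program lengths.

```python
import random
import zlib


def description_length_bits(data: bytes) -> int:
    """Rough upper-bound proxy for description length: zlib-compressed size, in bits.

    True Kolmogorov complexity is uncomputable; a compressor only bounds it from above.
    """
    return 8 * len(zlib.compress(data, 9))


def simplicity_prior(lengths_bits: dict[str, int]) -> dict[str, float]:
    """Solomonoff-style weighting: each hypothesis gets 2**-length, then normalize."""
    weights = {name: 2.0 ** -bits for name, bits in lengths_bits.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


if __name__ == "__main__":
    patterned = b"01" * 500                       # 1,000 bytes produced by a tiny rule
    rng = random.Random(0)
    noisy = bytes(rng.getrandbits(8) for _ in range(1000))  # 1,000 bytes with no rule
    print(description_length_bits(patterned), description_length_bits(noisy))

    # Hypothetical program lengths, in bits, for three competing explanations
    print(simplicity_prior({"short": 10, "medium": 12, "long": 20}))
```

The patterned bytes compress to a small fraction of the noisy ones, and every extra bit of program length halves a hypothesis’s prior weight.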

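When you think about things in this way, it quickly becomes apparent that “The lady down the street is a witch; she did it” is not the simplest explanation with the fewest assumptions. To write it as a program, you would have to specify a witch: a mind with its own intentions and powers, which, as Yudkowsky argues, takes far more code than something like Maxwell’s equations. Worse, the word “witch” doesn’t explain anything. An explanation is only useful if it rules out possibilities; one that could account for any outcome at all predicts nothing, so no observation can ever count for or against it.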
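One way to see why an explanation that permits anything explains nothing is a small Bayesian comparison. In this hypothetical sketch, the outcomes, the rival “spoiled feed” explanation, and all of the numbers are invented for illustration. The witch hypothesis spreads its probability evenly over every possible outcome, while the rival commits to one. Equal priors are deliberately generous to the witch; a simplicity prior would penalize it further.

```python
def posterior(priors: dict[str, float],
              likelihoods: dict[str, dict[str, float]],
              observed: str) -> dict[str, float]:
    """Bayes' rule over competing hypotheses: P(h | observed) is proportional to P(h) * P(observed | h)."""
    unnormalized = {h: priors[h] * likelihoods[h][observed] for h in priors}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


OUTCOMES = ["cow_sick", "crops_fail", "storm", "nothing_happens"]

# "A witch did it" can account for any outcome, so it gives each one the same probability.
witch = {outcome: 1 / len(OUTCOMES) for outcome in OUTCOMES}
# A hypothetical specific explanation ("the cow ate spoiled feed") sticks its neck out.
spoiled_feed = {"cow_sick": 0.85, "crops_fail": 0.05, "storm": 0.05, "nothing_happens": 0.05}

priors = {"witch": 0.5, "spoiled_feed": 0.5}  # equal priors, to isolate the effect of predictions
likelihoods = {"witch": witch, "spoiled_feed": spoiled_feed}

if __name__ == "__main__":
    print(posterior(priors, likelihoods, "cow_sick"))
```

Whatever happens, the witch hypothesis only ever “predicted” it with probability 1/4, so an explanation that actually committed to the observed outcome overtakes it.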

I’ve explained this concept just to get you thinking about the actual complexity of ideas and how that relates to language and heuristics. I’m not suggesting that you try to do Solomonoff Induction calculations on every decision you have to make, or that you attempt to ditch heuristics – and here’s why.

Heuristics and labels are often useful. They let us use our prior knowledge of the world to make quick, mostly accurate predictions about things. In turn, we can take action and get on with our lives.

I’m sitting in a coffee shop as I write this. If I look out the window, I can see a black car parked on the other side of the parking lot.

Sitting here, I can quickly make a prediction that the car poses no threat to me right now. I can assign a 99.9% probability that it will not come barreling through the coffee shop wall and kill me.

Luckily, I don’t have to sit around trying to rigorously define the concepts of “car,” “threat,” and “coffee shop wall” in order to come to this conclusion. I have prior knowledge:

- The car is parked and no one is inside it

- Most cars do not move by themselves (yet)

- In my part of the world, people don’t generally try to kill other people

- Even if they did, they probably wouldn’t try to do it with a car if their target is inside a building

- The brick wall of the coffee shop would probably stop the car anyway, since there isn’t much room in the lot to gain speed

These priors give me useful heuristics with which to make the prediction in a split second – letting me get back to writing without fear of being crushed by a rogue car and a collapsing brick wall.

However, it’s important for me to realize that I am relying on heuristics to make this prediction. I haven’t considered every piece of evidence in detail, and as a result I cannot assign a 100% probability to my prediction.

If I were to attempt a Bayesian probability calculation for it, I’d use these heuristics as strongly predictive priors – but then I’d need to define them, consider cases where they might not be true, and adjust accordingly to get a more accurate prediction.

What if the car actually is self-driving? What if I have an inaccurate mental model of the parking lot, and there actually is enough space for the car to gain the velocity necessary to knock down the brick wall?
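Here is a minimal sketch of what one such adjustment might look like, using Bayes’ rule. The hypothesis is “the parked car will come through the wall,” and its prior of 0.001 comes from the 99.9% estimate above; the new evidence (the engine starting and the car rolling toward the shop) and both likelihoods are hypothetical numbers chosen only for illustration.

```python
def bayes_update(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """Return P(H | E) from P(H), P(E | H), and P(E | not H) using Bayes' rule."""
    numerator = prior * p_evidence_if_true
    p_evidence = numerator + (1 - prior) * p_evidence_if_false
    return numerator / p_evidence


# H: "the parked car will come through the wall." Prior taken from the 99.9% estimate.
prior_threat = 0.001

if __name__ == "__main__":
    # Hypothetical evidence: the engine starts and the car begins rolling toward the shop.
    updated = bayes_update(prior_threat, p_evidence_if_true=0.9, p_evidence_if_false=0.05)
    print(f"prior {prior_threat:.3f} -> posterior {updated:.3f}")
```

With these made-up numbers the probability rises from 0.001 to roughly 0.018: still small, but about 18 times higher. Evidence that is equally likely whether or not the car is a threat would leave the prior unchanged.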

The Second Law of Thermodynamics alone means that I can never be 100% certain of my prediction: it is probabilistic in nature, so macroscopic decreases in entropy are only “virtually” impossible, not strictly impossible.
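To put a rough number on “virtually impossible,” here is a standard textbook idealization (an assumption for illustration, not a model of the coffee shop): treat a gas as N independent molecules, each equally likely to be found in either half of its container. The probability that all of them happen to be in one particular half at the same moment is

$$
P(\text{all } N \text{ in one half}) = \left(\tfrac{1}{2}\right)^{N} = 2^{-N},
\qquad
N = 100 \;\Rightarrow\; P = 2^{-100} \approx 7.9 \times 10^{-31}.
$$

A mole of gas contains about 6.02 × 10^23 molecules, which pushes the probability down to 2 raised to the power of minus that number. Nothing forbids the event; it is simply so improbable that treating it as impossible costs nothing in practice.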

Still, I can be “virtually” certain that I’m in no danger of being killed by the car while I sit here. Even after defining the concepts more precisely and considering the evidence more closely, my heuristics still prove useful enough to bet my safety on.

But here’s the point: Your heuristics are not always that useful.

My beliefs about the car are rooted in my detailed knowledge of the surrounding area (I live around here), my extensive experiences with the types of people who live around here (they’re typically not Twisted Metal characters), and my confidence in the fairly consistent nature of physics.

What happens when your beliefs are not as solidly grounded? Here’s where we get back to the usefulness of thinking about the underlying assumptions we’re making when we form beliefs.

Since I mentioned Robert Heinlein earlier, let me use him once more as an example. It has been speculated that Hanlon’s Razor – a term inspired by Occam’s Razor – originated from a short story he wrote called “Logic of Empire.” Here’s the simplest wording:

“Never attribute to malice that which is adequately explained by stupidity.”

This is one of my favorite sayings, and as a concept it has proved incredibly useful in keeping my personal relationships from deteriorating.

When someone does something that angers or hurts you, it’s easy to attribute the action to malice. We do it almost automatically, and I think it’s safe to assume there’s some useful evolutionary programming we can attribute that to:

- If something hurts me, it probably wants to eat/kill/hurt/take resources from me

- If something is nice to me, it’s probably part of my group

In a natural environment, this black-and-white heuristic is very useful – acting upon its conclusions can often mean the difference between life and death.

Unfortunately, this same heuristic that governs the conclusions you come to when a tiger springs out of a bush also governs what you think when your friends go to the movies and forget to invite you.

“How dare they not invite me? They must hate me!”

In this case, your brain presents you with a false dilemma: “I thought my friends liked me, but they didn’t invite me to the movies.” Either they like you and include you, or they left you out on purpose.

This isn’t a case of a tiger trying to eat you; any number of non-malicious factors could have been involved in the result:

- Your friends were unorganized and didn’t assign someone to do all the invitations

- You didn’t happen to be around and it was just a spontaneous event

- Someone tried to call you but your phone was dead

- The cell tower lost the call, so it didn’t even reach your phone

…the list goes on.
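Hanlon’s Razor can also be read as a statement about priors. In this hypothetical sketch, the explanations come from the list above, and every probability is invented for illustration: malice explains being left out very well, but it starts with a low prior, because most friendships don’t run on secret hatred. Once the mundane explanations are weighed together, malice ends up with only a small share of the probability.

```python
# Hypothetical priors: how often each explanation is behind a missed invitation in general.
PRIORS = {
    "malice": 0.05,
    "disorganized": 0.35,
    "spontaneous": 0.35,
    "dead_phone": 0.15,
    "dropped_call": 0.10,
}

# Hypothetical likelihoods: P(you weren't invited | explanation).
LIKELIHOODS = {
    "malice": 0.95,
    "disorganized": 0.60,
    "spontaneous": 0.70,
    "dead_phone": 0.80,
    "dropped_call": 0.80,
}


def explain_missed_invite(priors: dict[str, float], likelihoods: dict[str, float]) -> dict[str, float]:
    """Posterior over explanations after observing 'not invited', via Bayes' rule."""
    joint = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(joint.values())
    return {h: p / total for h, p in joint.items()}


if __name__ == "__main__":
    posterior = explain_missed_invite(PRIORS, LIKELIHOODS)
    for explanation, p in sorted(posterior.items(), key=lambda item: -item[1]):
        print(f"{explanation:>13}: {p:.2f}")
```

With these made-up numbers, malice ends up below 10%, even though it is the explanation that fits the snub most neatly.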

So here’s the takeaway.

Heuristics and labels are useful, but they only get you so far. Their very nature encourages you to overly simplify and categorize concepts that may be much more nuanced and detailed than you initially realize.

Therefore, when forming opinions and making decisions, it’s a good idea to question your underlying assumptions and think about possible in-between states that exist on the spectrum between the two initial positions you were presented with. Get specific with the definitions of the concepts that support your opinions.

Now, this doesn’t mean that your initial position on an issue will always be overturned or invalidated after you’ve rigorously defined the concepts it depends on. Your position on it might remain the same.

However, you might just find yourself changing your mind. Either way, this type of deliberate thinking is focused on forming accurate beliefs and will make you a wiser, more capable person.

When you learn to marry heuristics and precise, Solomonoff-inspired thinking in an optimal way, you get the best of both worlds.